Testing tools for AI systems

ChatGPT has triggered a new wave of hype around artificial intelligence, and the possibilities of AI are impressive. At the same time, quality assurance and control of AI systems are becoming increasingly important, especially when these systems take on responsible tasks. This is because chatbot results are based on huge amounts of text data from the Internet. However, systems such as ChatGPT only calculate the most probable answer to a question and output it as if it were a fact. So what testing tools exist to measure the quality of, for example, the texts generated by ChatGPT?

AI test catalog

Testing tools in use

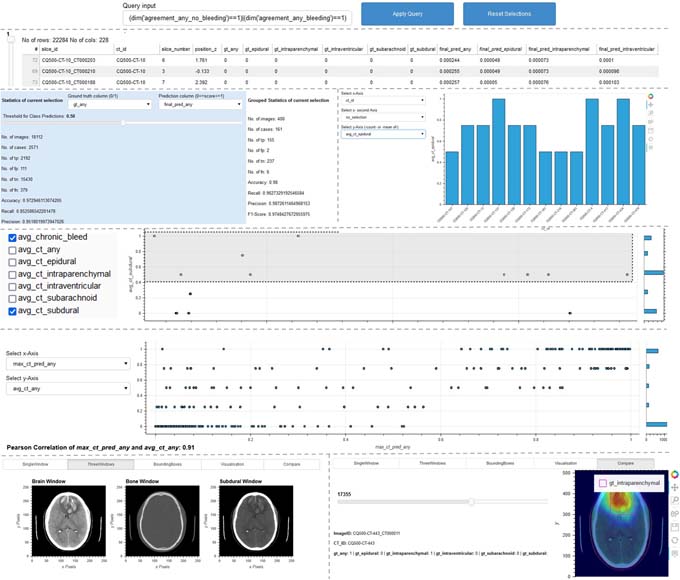

From April 17 to 21, researchers from Fraunhofer IAIS will be presenting various testing tools and procedures at the joint Fraunhofer booth (Hall 16, Booth A12) at Hannover Messe 2023. These can be used to systematically examine AI systems for vulnerabilities throughout their lifecycle and to safeguard against AI risks. The tools support developers and testing institutes in systematically evaluating the quality of AI systems and thus ensuring their trustworthiness. One example is the "ScrutinAI" tool, which enables auditors to systematically search for weaknesses in neural networks and thus test the quality of AI applications. A concrete use case is an AI application that recognizes anomalies and diseases in CT images. The question here is whether all types of anomalies are recognized equally well, or whether some are recognized better than others. This analysis helps auditors assess whether an AI application is suitable for its intended context of use. Developers benefit as well: they can recognize shortcomings in their AI systems at an early stage and take appropriate corrective measures, such as enriching the training data with specific examples.
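
The question of whether all anomaly types are recognized equally well can be illustrated with a small per-class comparison. The following Python sketch is not ScrutinAI and does not use its interface. The function names, the 10-point tolerance, and the data are hypothetical and made up for illustration. It only shows the general idea of computing a detection rate (recall) per anomaly type and flagging the types that lag behind the best one.

```python
from collections import defaultdict


def recall_per_class(records):
    """Return {anomaly_type: fraction of cases the model detected}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for anomaly_type, detected in records:
        totals[anomaly_type] += 1
        if detected:
            hits[anomaly_type] += 1
    return {t: hits[t] / totals[t] for t in totals}


def weak_classes(recalls, tolerance=0.10):
    """Flag types whose recall is more than `tolerance` below the best type.

    The tolerance is an arbitrary assumption, not a recommended threshold.
    """
    best = max(recalls.values())
    return sorted(t for t, r in recalls.items() if best - r > tolerance)


# Made-up illustration data: (anomaly type, did the model detect it?)
records = [
    ("nodule", True), ("nodule", True), ("nodule", True), ("nodule", False),
    ("fracture", True), ("fracture", True), ("fracture", True), ("fracture", True),
    ("effusion", True), ("effusion", False), ("effusion", False), ("effusion", False),
]

recalls = recall_per_class(records)
flagged = weak_classes(recalls)

for anomaly_type, value in recalls.items():
    print(f"{anomaly_type}: recall {value:.2f}")
print("Types needing attention:", flagged)
```

In a sketch like this, the flagged types would be the candidates for the improvement measure mentioned above, namely enriching the training data with specific examples of those anomalies.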

Source and further information: Fraunhofer IAIS

This article originally appeared on m-q.ch - https://www.m-q.ch/de/prueftools-fuer-ki-systeme/